I am trying to train a model on the following small sample.

X = [[1, 2], [1, 2], [2, 2]]

y = [5, 5 , 8]

I am using the code below to train on this sample.

from xgboost import XGBRegressor, plot_tree

reg = XGBRegressor(max_depth=2, learning_rate=1.0, n_estimators=2, silent=False, objective='reg:linear')

reg.fit(X,y)

plot_tree(reg,num_trees = 0)

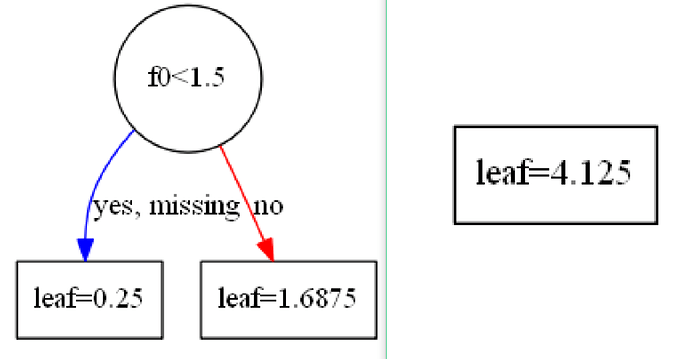

Then i got two trees below.

I got confused when I ran a test sample, X_test = [[1, 2]], because the prediction does not match what I compute by hand from the trees:

reg.predict(X_test)  # prints 4.875

Summing the leaf values from the tree structure above gives: 0.25 + 4.125 = 4.375

Why do the two numbers differ by 0.5? Am I doing something wrong?
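One thing worth checking: XGBoost adds a global bias, `base_score`, to the sum of the leaf values, and in older releases (the ones that still accept `silent` and `reg:linear`) it defaults to 0.5. The sketch below is only the arithmetic, assuming `base_score` was left at that default. The leaf values are the ones read off the plotted trees, and the variable names are mine.

```python
# Leaf values reached by the sample [1, 2] in tree 0 and tree 1,
# as read off the plotted trees.
leaf_values = [0.25, 4.125]

# Assumption: base_score was not set explicitly, so the model uses
# the 0.5 default of older XGBoost releases.
base_score = 0.5

# For a squared-error objective the prediction is the bias plus the leaf sum.
manual_prediction = base_score + sum(leaf_values)
print(manual_prediction)  # 4.875
```

If that assumption holds, the missing 0.5 is exactly the gap between 4.375 and 4.875, and passing `base_score=0` to `XGBRegressor` should make the hand-computed sum match `reg.predict`.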