Hi there. This is an old topic, but as it is the closest one to my questions, I am writing here.

I would like to ask whether there is any intuition or interpretation behind weighting instances by their hessians. For squared error, the hessian equals 1 for every instance, so you can think of the coverage/weight as the size of the node (the number of instances in it). But are there natural interpretations for other loss functions, ones that can be understood without going deep into the maths and loss function derivatives?
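To make the question concrete, here is a small sketch of the per-instance gradient and hessian for two losses. The function names are my own and this is not XGBoost code. I am assuming squared error is written with the 1/2 factor and that logistic loss is taken with respect to the raw margin (log-odds).

```python
import math

def squared_error_grad_hess(y, pred):
    # Assumed loss: 0.5 * (pred - y)^2, so the second derivative is 1.
    return pred - y, 1.0

def logistic_grad_hess(y, margin):
    # Assumed loss: log loss on the raw margin; p is the predicted probability.
    p = 1.0 / (1.0 + math.exp(-margin))
    return p - y, p * (1.0 - p)

# The hessian of log loss depends on how confident the current prediction is.
for margin in (-4.0, 0.0, 4.0):
    g, h = logistic_grad_hess(1.0, margin)
    print(f"margin={margin:+.1f}  grad={g:+.4f}  hess={h:.4f}")
```

For log loss the hessian is p(1 - p), which peaks at 0.25 when p = 0.5 and shrinks towards 0 for confident predictions, so a node's summed hessian is no longer just an instance count.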

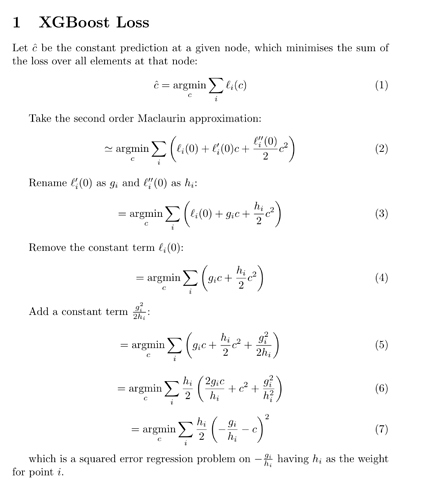

I would also like to ask about the passage in the “XGBoost: A Scalable Tree Boosting System” paper that describes the hessians as weights:

To see why $h_i$ represents the weight, we can rewrite Eq (3) as $\sum_{i=1}^n \frac{1}{2}h_i(f_t(x_i) - \frac{g_i}{h_i})^2 + \Omega(f_t) + constant$

I’m probably missing something, but is this formula correct? Looking only at the summation term, shouldn’t it be $\frac{1}{2}h_i(f_t(x_i) - (-\frac{g_i}{h_i}))^2$, i.e., $-(-g_i/h_i)$ instead of just $-g_i/h_i$?
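As a sanity check, I tried a quick numerical comparison. It assumes that the per-instance term of Eq (3) is $g_i f_t(x_i) + \frac{1}{2} h_i f_t^2(x_i)$, which is how I read the paper. The helper names and the values of `g`, `h` and `fs` are arbitrary choices of mine. A valid rewrite should differ from that term only by something that does not depend on $f_t(x_i)$.

```python
import math

def eq3_term(g, h, f):
    # Per-instance term of Eq (3) as I read it: g*f + 0.5*h*f^2
    return g * f + 0.5 * h * f * f

def paper_form(g, h, f):
    # Rewritten term as printed in the paper: 0.5*h*(f - g/h)^2
    return 0.5 * h * (f - g / h) ** 2

def plus_form(g, h, f):
    # Alternative with the sign flipped: 0.5*h*(f - (-g/h))^2
    return 0.5 * h * (f + g / h) ** 2

def differs_by_constant(form, g, h, fs):
    # True if eq3_term - form takes the same value for every f in fs.
    diffs = [eq3_term(g, h, f) - form(g, h, f) for f in fs]
    return all(math.isclose(d, diffs[0], abs_tol=1e-9) for d in diffs)

g, h = 0.7, 0.3  # arbitrary values, h > 0
fs = [-2.0, -0.5, 0.0, 1.0, 3.0]
print("printed form:", differs_by_constant(paper_form, g, h, fs))
print("flipped sign:", differs_by_constant(plus_form, g, h, fs))
```

Under that reading of Eq (3), only the flipped-sign version differs by a constant (namely $-\frac{g_i^2}{2h_i}$), which is what completing the square by hand gives as well.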