Dear Community,

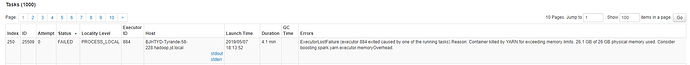

I am running XGBoost (v0.82) on YARN and ran into this problem: with the same resources, training crashes during `foreachPartition` when I use `tree_method=hist` with `grow_policy=lossguide`. The same job with `tree_method=exact` and all other parameters unchanged does not crash. Can anybody help with this?
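For reference, here is a minimal sketch of the two parameter sets being compared, written as the `Map[String, Any]` that xgboost4j-spark estimators accept. Only `tree_method` and `grow_policy` come from the description above. Every other key and value is a hypothetical placeholder, not the configuration actually used. `grow_policy` is omitted from the `exact` map because the XGBoost docs of this era list it as supported only with `tree_method=hist`.

```scala
// Sketch of the two configurations compared in this post, as plain Scala maps.
// Only tree_method and grow_policy come from the post; all other keys/values
// are HYPOTHETICAL placeholders, not the settings actually used.
object HistVsExactParams {
  // Shared settings (hypothetical values for illustration only).
  val base: Map[String, Any] = Map(
    "objective" -> "reg:linear",
    "eta" -> 0.1,
    "max_depth" -> 8,
    "num_round" -> 100,
    "num_workers" -> 200
  )

  // Configuration that crashes: histogram-based splits with loss-guided growth.
  val hist: Map[String, Any] =
    base ++ Map("tree_method" -> "hist", "grow_policy" -> "lossguide")

  // Configuration that completes: exact greedy splits. grow_policy is left out
  // because it is documented as applying only to tree_method=hist.
  val exact: Map[String, Any] = base + ("tree_method" -> "exact")

  // Keys whose values differ between two maps (including keys present in only one).
  def diffKeys(a: Map[String, Any], b: Map[String, Any]): Set[String] =
    (a.keySet ++ b.keySet).filter(k => a.get(k) != b.get(k))

  def main(args: Array[String]): Unit =
    println(diffKeys(hist, exact).toList.sorted.mkString(", "))
}
```

Running `main` prints `grow_policy, tree_method`, i.e. the only differences between the two runs. With xgboost4j-spark such a map would typically be passed to the estimator, e.g. `new XGBoostRegressor(HistVsExactParams.hist)`, before calling `fit`.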

Driver log:

```
19/05/07 18:18:06 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:06,781 DEBUG Recieve recover signal from 236
19/05/07 18:18:06 WARN TransportChannelHandler: Exception in connection from /10.198.155.165:15338
java.io.IOException: Connection reset by peer
	at sun.nio.ch.FileDispatcherImpl.read0(Native Method)
	at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39)
	at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223)
	at sun.nio.ch.IOUtil.read(IOUtil.java:192)
	at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:380)
	at io.netty.buffer.PooledUnsafeDirectByteBuf.setBytes(PooledUnsafeDirectByteBuf.java:288)
	at io.netty.buffer.AbstractByteBuf.writeBytes(AbstractByteBuf.java:1106)
	at io.netty.channel.socket.nio.NioSocketChannel.doReadBytes(NioSocketChannel.java:343)
	at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:123)
	at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:645)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:580)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:497)
	at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:459)
	at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)
	at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)
	at java.lang.Thread.run(Thread.java:745)
19/05/07 18:18:06 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:06,862 DEBUG Recieve recover signal from 179
19/05/07 18:18:06 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:06,943 DEBUG Recieve recover signal from 176
19/05/07 18:18:07 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:07,023 DEBUG Recieve recover signal from 133
19/05/07 18:18:07 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:07,103 DEBUG Recieve recover signal from 122
19/05/07 18:18:07 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:07,183 DEBUG Recieve recover signal from 139
19/05/07 18:18:07 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:07,264 DEBUG Recieve recover signal from 99
19/05/07 18:18:07 WARN TransportChannelHandler: Exception in connection from /10.198.124.130:22028
java.io.IOException: Connection reset by peer
	at sun.nio.ch.FileDispatcherImpl.read0(Native Method)
	at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39)
	at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223)
	at sun.nio.ch.IOUtil.read(IOUtil.java:192)
	at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:380)
	at io.netty.buffer.PooledUnsafeDirectByteBuf.setBytes(PooledUnsafeDirectByteBuf.java:288)
	at io.netty.buffer.AbstractByteBuf.writeBytes(AbstractByteBuf.java:1106)
	at io.netty.channel.socket.nio.NioSocketChannel.doReadBytes(NioSocketChannel.java:343)
	at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:123)
	at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:645)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:580)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:497)
	at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:459)
	at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)
	at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)
	at java.lang.Thread.run(Thread.java:745)
19/05/07 18:18:07 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:07,344 DEBUG Recieve recover signal from 154
19/05/07 18:18:07 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:07,521 DEBUG Recieve recover signal from 228
19/05/07 18:18:07 INFO YarnSchedulerBackend$YarnDriverEndpoint: Disabling executor 776.
19/05/07 18:18:07 INFO DAGScheduler: Executor lost: 776 (epoch 18)
19/05/07 18:18:07 INFO BlockManagerMasterEndpoint: Trying to remove executor 776 from BlockManagerMaster.
19/05/07 18:18:07 WARN BlockManagerMasterEndpoint: No more replicas available for rdd_4313_242 !
19/05/07 18:18:07 WARN BlockManagerMasterEndpoint: No more replicas available for rdd_4313_730 !
19/05/07 18:18:07 INFO BlockManagerMasterEndpoint: Removing block manager BlockManagerId(776, BJHTYD-Tyrande-92-233.hadoop.jd.local, 10315, None)
19/05/07 18:18:07 INFO BlockManagerMaster: Removed 776 successfully in removeExecutor
19/05/07 18:18:07 WARN YarnSchedulerBackend$YarnSchedulerEndpoint: Requesting driver to remove executor 752 for reason Container killed by YARN for exceeding memory limits. 26.2 GB of 26 GB physical memory used. Consider boosting spark.yarn.executor.memoryOverhead.
19/05/07 18:18:07 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:07,601 DEBUG Recieve recover signal from 105
19/05/07 18:18:07 ERROR YarnScheduler: Lost executor 752 on BJHTYD-Tyrande-157-36.hadoop.jd.local: Container killed by YARN for exceeding memory limits. 26.2 GB of 26 GB physical memory used. Consider boosting spark.yarn.executor.memoryOverhead.
19/05/07 18:18:07 WARN TaskSetManager: Lost task 207.0 in stage 48.0 (TID 25466, BJHTYD-Tyrande-157-36.hadoop.jd.local, executor 752): ExecutorLostFailure (executor 752 exited caused by one of the running tasks) Reason: Container killed by YARN for exceeding memory limits. 26.2 GB of 26 GB physical memory used. Consider boosting spark.yarn.executor.memoryOverhead.
19/05/07 18:18:07 INFO DAGScheduler: Executor lost: 752 (epoch 18)
19/05/07 18:18:07 INFO BlockManagerMasterEndpoint: Trying to remove executor 752 from BlockManagerMaster.
19/05/07 18:18:07 WARN BlockManagerMasterEndpoint: No more replicas available for rdd_4313_1282 !
19/05/07 18:18:07 WARN BlockManagerMasterEndpoint: No more replicas available for rdd_4313_1428 !
19/05/07 18:18:07 INFO BlockManagerMasterEndpoint: Removing block manager BlockManagerId(752, BJHTYD-Tyrande-157-36.hadoop.jd.local, 14545, None)
19/05/07 18:18:07 INFO BlockManagerMaster: Removed 752 successfully in removeExecutor
19/05/07 18:18:07 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:07,681 DEBUG Recieve recover signal from 174
19/05/07 18:18:07 WARN TransportChannelHandler: Exception in connection from /10.198.58.226:52944
java.io.IOException: Connection reset by peer
	at sun.nio.ch.FileDispatcherImpl.read0(Native Method)
	at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39)
	at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223)
	at sun.nio.ch.IOUtil.read(IOUtil.java:192)
	at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:380)
	at io.netty.buffer.PooledUnsafeDirectByteBuf.setBytes(PooledUnsafeDirectByteBuf.java:288)
	at io.netty.buffer.AbstractByteBuf.writeBytes(AbstractByteBuf.java:1106)
	at io.netty.channel.socket.nio.NioSocketChannel.doReadBytes(NioSocketChannel.java:343)
	at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:123)
	at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:645)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:580)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:497)
	at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:459)
	at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)
	at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)
	at java.lang.Thread.run(Thread.java:745)
19/05/07 18:18:07 WARN TransportChannelHandler: Exception in connection from /10.198.150.106:39366
java.io.IOException: Connection reset by peer
	at sun.nio.ch.FileDispatcherImpl.read0(Native Method)
	at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39)
	at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223)
	at sun.nio.ch.IOUtil.read(IOUtil.java:192)
	at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:380)
	at io.netty.buffer.PooledUnsafeDirectByteBuf.setBytes(PooledUnsafeDirectByteBuf.java:288)
	at io.netty.buffer.AbstractByteBuf.writeBytes(AbstractByteBuf.java:1106)
	at io.netty.channel.socket.nio.NioSocketChannel.doReadBytes(NioSocketChannel.java:343)
	at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:123)
	at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:645)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:580)
	at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:497)
	at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:459)
	at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)
	at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)
	at java.lang.Thread.run(Thread.java:745)
19/05/07 18:18:07 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:07,762 DEBUG Recieve recover signal from 138
19/05/07 18:18:07 INFO DFSClient: Could not complete /user/spark/log/application_1551338088092_5681270.lz4.inprogress retrying...
19/05/07 18:18:07 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:07,843 DEBUG Recieve recover signal from 114
19/05/07 18:18:07 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:07,924 DEBUG Recieve recover signal from 112
19/05/07 18:18:08 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:08,005 DEBUG Recieve recover signal from 50
19/05/07 18:18:08 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:08,085 DEBUG Recieve recover signal from 107
19/05/07 18:18:08 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:08,165 DEBUG Recieve recover signal from 157
19/05/07 18:18:08 INFO DAGScheduler: Job 30 failed: foreachPartition at XGBoost.scala:397, took 4047.180230 s
19/05/07 18:18:08 ERROR RabitTracker: Uncaught exception thrown by worker:
org.apache.spark.SparkException: Job 30 cancelled because SparkContext was shut down
	at org.apache.spark.scheduler.DAGScheduler$$anonfun$cleanUpAfterSchedulerStop$1.apply(DAGScheduler.scala:837)
	at org.apache.spark.scheduler.DAGScheduler$$anonfun$cleanUpAfterSchedulerStop$1.apply(DAGScheduler.scala:835)
	at scala.collection.mutable.HashSet.foreach(HashSet.scala:78)
	at org.apache.spark.scheduler.DAGScheduler.cleanUpAfterSchedulerStop(DAGScheduler.scala:835)
	at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onStop(DAGScheduler.scala:1902)
	at org.apache.spark.util.EventLoop.stop(EventLoop.scala:83)
	at org.apache.spark.scheduler.DAGScheduler.stop(DAGScheduler.scala:1815)
	at org.apache.spark.SparkContext$$anonfun$stop$8.apply$mcV$sp(SparkContext.scala:1940)
	at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1361)
	at org.apache.spark.SparkContext.stop(SparkContext.scala:1939)
	at org.apache.spark.TaskFailedListener$$anon$1$$anonfun$run$1.apply$mcV$sp(SparkParallelismTracker.scala:131)
	at org.apache.spark.TaskFailedListener$$anon$1$$anonfun$run$1.apply(SparkParallelismTracker.scala:131)
	at org.apache.spark.TaskFailedListener$$anon$1$$anonfun$run$1.apply(SparkParallelismTracker.scala:131)
	at scala.util.DynamicVariable.withValue(DynamicVariable.scala:58)
	at org.apache.spark.TaskFailedListener$$anon$1.run(SparkParallelismTracker.scala:130)
	at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:642)
	at org.apache.spark.SparkContext.runJob(SparkContext.scala:2063)
	at org.apache.spark.SparkContext.runJob(SparkContext.scala:2084)
	at org.apache.spark.SparkContext.runJob(SparkContext.scala:2103)
	at org.apache.spark.SparkContext.runJob(SparkContext.scala:2128)
	at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1.apply(RDD.scala:935)
	at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1.apply(RDD.scala:933)
	at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
	at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)
	at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)
	at org.apache.spark.rdd.RDD.foreachPartition(RDD.scala:933)
	at ml.dmlc.xgboost4j.scala.spark.XGBoost$$anonfun$trainDistributed$1$$anon$1.run(XGBoost.scala:397)
19/05/07 18:18:08 INFO DAGScheduler: ResultStage 48 (foreachPartition at XGBoost.scala:397) failed in 255.510 s due to Stage cancelled because SparkContext was shut down
19/05/07 18:18:08 INFO YarnClientSchedulerBackend: Interrupting monitor thread
19/05/07 18:18:08 INFO YarnClientSchedulerBackend: Shutting down all executors
19/05/07 18:18:08 INFO YarnSchedulerBackend$YarnDriverEndpoint: Asking each executor to shut down
19/05/07 18:18:08 INFO SchedulerExtensionServices: Stopping SchedulerExtensionServices
(serviceOption=None,
services=List(),
started=false)
19/05/07 18:18:08 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:08,245 DEBUG Recieve recover signal from 158
19/05/07 18:18:08 INFO YarnClientSchedulerBackend: Stopped
19/05/07 18:18:08 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
19/05/07 18:18:08 INFO MemoryStore: MemoryStore cleared
19/05/07 18:18:08 INFO BlockManager: BlockManager stopped
19/05/07 18:18:08 INFO BlockManagerMaster: BlockManagerMaster stopped
19/05/07 18:18:08 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
19/05/07 18:18:08 INFO SparkContext: Successfully stopped SparkContext
19/05/07 18:18:08 ERROR TransportResponseHandler: Still have 1 requests outstanding when connection from /10.198.152.98:23182 is closed
19/05/07 18:18:08 WARN YarnSchedulerBackend$YarnSchedulerEndpoint: Attempted to get executor loss reason for executor id 776 at RPC address 10.198.92.233:20592, but got no response. Marking as slave lost.
java.io.IOException: Connection from /10.198.152.98:23182 closed
	at org.apache.spark.network.client.TransportResponseHandler.channelInactive(TransportResponseHandler.java:146)
	at org.apache.spark.network.server.TransportChannelHandler.channelInactive(TransportChannelHandler.java:108)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:245)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:231)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelInactive(AbstractChannelHandlerContext.java:224)
	at io.netty.channel.ChannelInboundHandlerAdapter.channelInactive(ChannelInboundHandlerAdapter.java:75)
	at io.netty.handler.timeout.IdleStateHandler.channelInactive(IdleStateHandler.java:277)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:245)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:231)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelInactive(AbstractChannelHandlerContext.java:224)
	at io.netty.channel.ChannelInboundHandlerAdapter.channelInactive(ChannelInboundHandlerAdapter.java:75)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:245)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:231)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelInactive(AbstractChannelHandlerContext.java:224)
	at io.netty.channel.ChannelInboundHandlerAdapter.channelInactive(ChannelInboundHandlerAdapter.java:75)
	at org.apache.spark.network.util.TransportFrameDecoder.channelInactive(TransportFrameDecoder.java:182)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:245)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:231)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelInactive(AbstractChannelHandlerContext.java:224)
	at io.netty.channel.DefaultChannelPipeline$HeadContext.channelInactive(DefaultChannelPipeline.java:1354)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:245)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelInactive(AbstractChannelHandlerContext.java:231)
	at io.netty.channel.DefaultChannelPipeline.fireChannelInactive(DefaultChannelPipeline.java:917)
	at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:822)
	at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163)
	at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasksFrom(SingleThreadEventExecutor.java:379)
	at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:354)
	at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:678)
	at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:473)
	at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)
	at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)
	at java.lang.Thread.run(Thread.java:745)
19/05/07 18:18:08 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:08,326 DEBUG Recieve recover signal from 108
19/05/07 18:18:08 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:08,410 DEBUG Recieve recover signal from 140
19/05/07 18:18:08 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:08,502 DEBUG Recieve recover signal from 134
19/05/07 18:18:08 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:08,582 DEBUG Recieve recover signal from 42
19/05/07 18:18:08 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:08,663 DEBUG Recieve recover signal from 173
19/05/07 18:18:08 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:08,743 DEBUG Recieve recover signal from 119
19/05/07 18:18:08 INFO RabitTracker$TrackerProcessLogger: 2019-05-07 18:18:08,824 DEBUG Recieve recover signal from 121
19/05/07 18:18:13 INFO RabitTracker$TrackerProcessLogger: Tracker Process ends with exit code 143
19/05/07 18:18:13 INFO RabitTracker: Tracker Process ends with exit code 143
19/05/07 18:18:13 INFO XGBoostSpark: Rabit returns with exit code 143
Exception in thread "main" ml.dmlc.xgboost4j.java.XGBoostError: XGBoostModel training failed
	at ml.dmlc.xgboost4j.scala.spark.XGBoost$.ml$dmlc$xgboost4j$scala$spark$XGBoost$$postTrackerReturnProcessing(XGBoost.scala:511)
	at ml.dmlc.xgboost4j.scala.spark.XGBoost$$anonfun$trainDistributed$1.apply(XGBoost.scala:404)
	at ml.dmlc.xgboost4j.scala.spark.XGBoost$$anonfun$trainDistributed$1.apply(XGBoost.scala:381)
	at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
	at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
	at scala.collection.Iterator$class.foreach(Iterator.scala:893)
	at scala.collection.AbstractIterator.foreach(Iterator.scala:1336)
	at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)
	at scala.collection.AbstractIterable.foreach(Iterable.scala:54)
	at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)
	at scala.collection.AbstractTraversable.map(Traversable.scala:104)
	at ml.dmlc.xgboost4j.scala.spark.XGBoost$.trainDistributed(XGBoost.scala:380)
	at ml.dmlc.xgboost4j.scala.spark.XGBoostRegressor.train(XGBoostRegressor.scala:188)
	at ml.dmlc.xgboost4j.scala.spark.XGBoostRegressor.train(XGBoostRegressor.scala:48)
	at org.apache.spark.ml.Predictor.fit(Predictor.scala:118)
	at org.apache.spark.ml.Predictor.fit(Predictor.scala:82)
	at org.apache.spark.ml.Estimator.fit(Estimator.scala:61)
	at com.isf.menasor.trainers.GridSearchTrainer$$anonfun$train$1.apply(GridSearchTrainer.scala:46)
	at com.isf.menasor.trainers.GridSearchTrainer$$anonfun$train$1.apply(GridSearchTrainer.scala:38)
	at scala.collection.IndexedSeqOptimized$class.foreach(IndexedSeqOptimized.scala:33)
	at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:186)
	at com.isf.menasor.trainers.GridSearchTrainer.train(GridSearchTrainer.scala:38)
	at com.isf.menasor.Menasor$.main(Menasor.scala:35)
	at com.isf.menasor.Menasor.main(Menasor.scala)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)
	at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:891)
	at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:200)
	at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:230)
	at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:139)
	at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
19/05/07 18:18:13 INFO ShutdownHookManager: Shutdown hook called
19/05/07 18:18:13 INFO ShutdownHookManager: Deleting directory /data0/tmp/spark-10789d6c-7a1c-455a-b5a5-699173df24ab
19/05/07 18:18:13 INFO ShutdownHookManager: Deleting directory /data0/tmp/spark-75b45dcc-4734-44de-b261-f0373cddb0ff
```
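The executor losses in this log are reported as `Container killed by YARN for exceeding memory limits. 26.2 GB of 26 GB physical memory used`, with Spark's own hint to raise `spark.yarn.executor.memoryOverhead`. As a rough sketch, assuming Spark 2.x's default rule that the overhead is max(10% of executor memory, 384 MB) when it is not set explicitly, the YARN container limit is the executor heap plus that overhead. XGBoost's native (C++) buffers live outside the JVM heap, so once the heap is in use they have to fit in the overhead share. The executor sizes below are hypothetical, not this job's actual settings.

```scala
// Rough model of the per-executor YARN container size requested by Spark 2.x.
// ASSUMPTIONS: default overhead = max(10% of executor memory, 384 MB) when
// spark.yarn.executor.memoryOverhead is unset; YARN's rounding up to its
// allocation increment is ignored. All sizes are in MB.
object ContainerMemory {
  val OverheadFactor = 0.10
  val OverheadMinMb = 384L

  // Overhead Spark would use if none is configured explicitly.
  def defaultOverheadMb(executorMemoryMb: Long): Long =
    math.max((OverheadFactor * executorMemoryMb).toLong, OverheadMinMb)

  // Physical memory YARN lets the container use before killing it:
  // JVM heap (executor memory) plus the off-heap overhead allowance.
  def containerLimitMb(executorMemoryMb: Long, overheadMb: Option[Long] = None): Long =
    executorMemoryMb + overheadMb.getOrElse(defaultOverheadMb(executorMemoryMb))

  def main(args: Array[String]): Unit = {
    // Two HYPOTHETICAL splits of the same 26 GB container: the second one
    // leaves far more room for native (non-heap) allocations.
    val heapHeavy = containerLimitMb(24 * 1024L, Some(2 * 1024L))
    val overheadHeavy = containerLimitMb(18 * 1024L, Some(8 * 1024L))
    println(s"heapHeavy=$heapHeavy MB, overheadHeavy=$overheadHeavy MB")
  }
}
```

Both splits give a 26624 MB (26 GB) container, but only the second reserves 8 GB for memory outside the JVM heap. Such a split would be requested with something like `spark-submit --executor-memory 18g --conf spark.yarn.executor.memoryOverhead=8192 ...`, where the overhead value is in MB.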