I have used XGBoost for a predictive regression task with a large training set (initially ~1,500,000 samples, 50% subsampled during training). During model optimization, I did not see any large random differences in accuracy between runs.

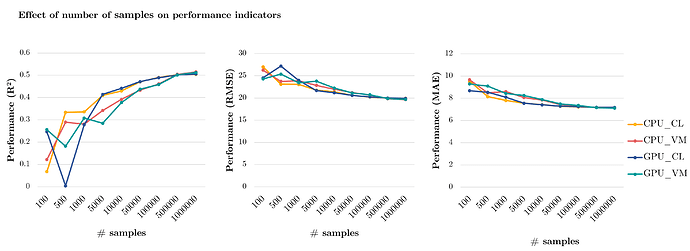

I wanted to do a hardware comparison between the two environments I had used for training, and between CPU and GPU within each of them. For this comparison, I trained the models on an increasingly large dataset, starting at 100 samples and ending at 1,000,000 samples. For each of the four conditions (environment × CPU/GPU), the subsampled data was similar. If I understand correctly, XGBoost is deterministic when no randomness is introduced during training through subsampling. However, I see a lot of random behaviour when training on the smaller training sets (enormous drops in accuracy). This is not limited to one condition (e.g., only the GPUs), but can occur in any of them.
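For illustration, one way to make sure every condition sees exactly the same rows at each training-set size is to draw a single seeded permutation and take prefixes of it. This is a minimal sketch, not the code used for the plot; `nested_subsets`, the seed value, and the example sizes are hypothetical.

```python
import random


def nested_subsets(n_total, sizes, seed=0):
    """Return {size: sorted row indices} for each requested size.

    Every smaller subset is contained in every larger one, and the
    result depends only on `n_total`, `sizes`, and `seed`.
    """
    rng = random.Random(seed)   # local RNG, independent of global state
    order = list(range(n_total))
    rng.shuffle(order)          # one fixed permutation of all row indices
    return {k: sorted(order[:k]) for k in sizes}


sizes = [100, 1_000, 10_000]
subsets_a = nested_subsets(50_000, sizes, seed=42)
subsets_b = nested_subsets(50_000, sizes, seed=42)

# Same seed gives the same rows, so each condition can reuse them.
print(subsets_a == subsets_b)
```

Saving the index lists once and loading them in every environment would avoid relying on identical random-number behaviour across Python versions.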

In the plot below, one of the comparisons can be viewed, with a large performance drop for the Colab-CPU condition.

The hardware used is:

- CoLab Pro+ (CL)
  - GPU: Tesla V100-SXM2 GPU, 16 GB RAM
  - CPU: Xeon CPU (2.00GHz)
- Virtual Ubuntu 18.04 environment (VM)
  - GPU: NVIDIA Quadro RTX 6000, 24 GB RAM
  - CPU: Intel Xeon Gold (2.30GHz)

Since using a small number of samples can lead to unexpected behaviour in all conditions, I thought it might be due to floating-point errors, but I would not have expected these to have such a large effect. Could this be the case, or am I overlooking something else?
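To make concrete what I mean by floating-point errors: floating-point addition is not associative, so summing the same numbers in a different order (as different hardware or thread counts may do) can give slightly different results. A minimal, generic sketch, unrelated to XGBoost's internals:

```python
left = (0.1 + 0.2) + 0.3    # accumulate left to right
right = 0.1 + (0.2 + 0.3)   # same numbers, different grouping

print(left == right)        # False
print(abs(left - right))    # on the order of 1e-16
```

Differences of this size are tiny per operation, which is why I doubt they alone explain drops as large as the one in the plot.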

I did find out that XGBoost version 0.90 was used in the CoLab environment, whereas version 1.5.1 was used in the VM environment. After finding out that they differ in the `min_child_weight` default (0.50 versus 1, respectively), I set both explicitly to 1, which did not reduce the random behaviour.
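As a sketch of how the settings could be pinned so that nothing depends on version-specific defaults, one parameter dictionary can be built per condition. The helper `make_params`, the seed value, the objective, and the choice of the `hist`/`gpu_hist` tree methods are assumptions for illustration, not necessarily what was used for the plot.

```python
def make_params(device, seed=0):
    """Build an XGBoost parameter dict for one condition.

    `device` is "cpu" or "gpu". The seed, objective, and tree methods
    are assumptions made for this sketch.
    """
    return {
        "objective": "reg:squarederror",
        "min_child_weight": 1,   # set explicitly in both environments
        "subsample": 0.5,        # 50% row subsampling; 1.0 disables it
        "seed": seed,            # fixes the random draws used for subsampling
        "tree_method": "gpu_hist" if device == "gpu" else "hist",
    }


cpu_params = make_params("cpu")
gpu_params = make_params("gpu")

# The two conditions should differ only in the tree method.
differing = {k for k in cpu_params if cpu_params[k] != gpu_params[k]}
print(differing)
```

A dictionary like this would be passed as the first argument to `xgboost.train`, together with the training `DMatrix` and the number of boosting rounds.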

Thank you in advance! If further information is needed, please let me know.