Hi,

I am using Databricks (Spark 2.4.4) and XGBoost4J 0.9.

I am able to save my model to an S3 bucket (using dbutils.fs.cp after saving it to the local file system), but I can't load it.

Code and errors are below:

val trainedModel = pipeline.fit(trainUpdated) // train model on pipeline (vectorAssembler + xgbregressor)

Create a directory to save the pipeline (again, model + vector assembler) -

dbutils.fs.mkdirs("/tmp/test-sage")

val trainedModelPath = "/dbfs/tmp/test-sage/m"

Save the XGBoost stage of the pipeline through its native booster -

trainedModel.stages(1).asInstanceOf[ml.dmlc.xgboost4j.scala.spark.XGBoostRegressionModel].nativeBooster.saveModel(trainedModelPath)
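
For reference, a file written by `nativeBooster.saveModel` is in XGBoost's native format, so the matching reader is presumably the native one rather than the Spark ML reader. A minimal sketch, assuming `XGBoost.loadModel` from `ml.dmlc.xgboost4j.scala` is available in this version and that the path is a local file system path (a `/dbfs/...` path on Databricks). The helper name `loadNativeBooster` is hypothetical.

```scala
import ml.dmlc.xgboost4j.scala.{Booster, XGBoost}

// Reads back a file previously written with Booster.saveModel(path).
// Assumption: `localPath` is a local file system path (on Databricks, /dbfs/...).
def loadNativeBooster(localPath: String): Booster =
  XGBoost.loadModel(localPath)

// Usage with the path from this post:
// val booster = loadNativeBooster("/dbfs/tmp/test-sage/m")
```

This returns a plain `Booster`, not an `XGBoostRegressionModel`, so it does not restore the Spark pipeline stage.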

Then, copy from DBFS to S3 -

dbutils.fs.cp("/tmp/test-sage/m", "/mnt/S3/XXXX-data-science/sandbox/save-test-xgboost/model")

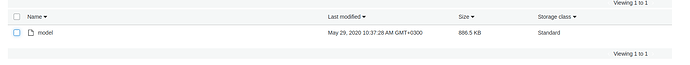

I see a file in S3 called model (see screenshot attached) -

However, when I try to load it using -

val xgb = XGBoostRegressor.load("/mnt/S3/XXXX-data-science/sandbox/save-test-xgboost/model")

I get the error -

org.apache.hadoop.mapred.InvalidInputException: Input path does not exist: /mnt/S3/XXXX-data-science/sandbox/save-test-xgboost/model/metadata

That is, the saved model is a single file (< 1 MB), and no metadata is saved alongside it, while the loader looks for a metadata path under the model location.
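
For comparison, here is a minimal sketch of the Spark ML persistence path, which writes a directory (including `metadata`) rather than a single file. It assumes the fitted pipeline is a `PipelineModel` and that every stage supports the Spark ML writer. The helper name `saveAndReload` and its `dir` argument are hypothetical.

```scala
import org.apache.spark.ml.PipelineModel

// Saves a fitted pipeline with the Spark ML writer and reads it back.
// Assumption: `dir` is a location Spark can write to (e.g. a DBFS mount),
// and all stages of `model` are MLWritable.
def saveAndReload(model: PipelineModel, dir: String): PipelineModel = {
  // Writes a directory containing metadata plus per-stage data.
  model.write.overwrite().save(dir)
  // Reads the same directory layout back into a PipelineModel.
  PipelineModel.load(dir)
}

// Usage (the target directory here is a hypothetical example):
// val reloaded = saveAndReload(trainedModel, "/mnt/S3/XXXX-data-science/sandbox/save-test-xgboost/pipeline")
```

The point of the sketch is only that the Spark ML reader and writer form a pair. A file produced by the native booster is a different format from what `load` expects.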

Am I doing something wrong?

From a quick search, I see this is a common pain point with XGBoost4J and Spark, so if this gets solved, I would be more than happy to write detailed documentation and open a PR for it.

Thanks,

Daniel

@hcho3 FYI