I have a binary classification problem where I am trying to predict default using XGBoost. Originally the minority (default) class makes up only 5% of the data. After training, I get an accuracy of 0.91 and a recall of 0.5.
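Both reported metrics come from thresholding the predicted probabilities. 0.5 is the usual default, but the post does not say which cutoff was used, so that is an assumption here. With only 5% defaults the accuracy baseline is also high. The pure-Python sketch below uses a hypothetical helper name and shows that a model that never predicts default already reaches 0.95 accuracy with zero recall.

```python
def accuracy_and_recall(probs, labels, threshold=0.5):
    """Accuracy and recall (true positive rate) after thresholding probabilities.
    The 0.5 default threshold is an assumption, not taken from the post."""
    preds = [1 if p >= threshold else 0 for p in probs]
    correct = sum(1 for yhat, y in zip(preds, labels) if yhat == y)
    true_pos = sum(1 for yhat, y in zip(preds, labels) if yhat == 1 and y == 1)
    positives = sum(labels)
    recall = true_pos / positives if positives else float("nan")
    return correct / len(labels), recall


# Baseline on a 5%-positive data set: a model that always predicts "no default".
labels = [1] * 5 + [0] * 95
always_negative = [0.0] * 100
print(accuracy_and_recall(always_negative, labels))  # (0.95, 0.0)
```

Moving the threshold (or shifting the probabilities) trades accuracy against recall without changing how the model ranks borrowers.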

I then up-sampled the minority class, which raised its share of the training data to 25%. After retraining, I get an accuracy of 0.7 and a recall of 0.75. I also noticed that my calibration plot looked a lot better. This goes against what I expected. I thought that up-sampling the minority class would lead to worse calibration, because I assumed upsampling/undersampling has the same effect as `scale_pos_weight`, i.e. it distorts the predicted probabilities. In my case, however, the calibration plot looks better:

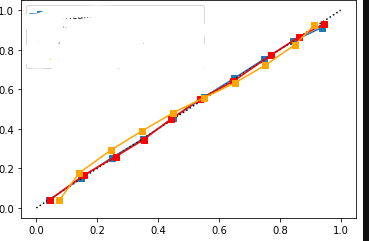

After upsampling, the Brier score of the uncalibrated model shown above is 0.15.

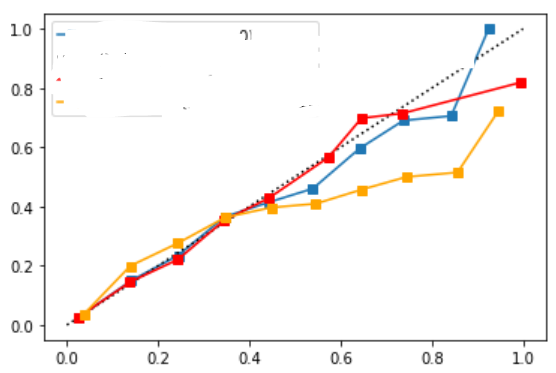

Before upsampling, the Brier score of the uncalibrated model was 0.05. So the Brier score was in fact lower before upsampling and increased to 0.15 after it.
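The Brier score is the mean squared difference between predicted probability and the 0/1 outcome, evaluated on whatever class mix the test set has. The sketch below uses synthetic data (an assumption, not the poster's data) with roughly 5% positives. It compares perfectly calibrated probabilities with the same probabilities after their odds are multiplied by 19/3, which is the idealized effect of replicating positives until they make up 25%. On the original class mix the inflated probabilities score worse, even though their ranking is identical. The helper names are hypothetical.

```python
import math
import random


def brier(probs, labels):
    """Mean squared difference between predicted probability and 0/1 outcome
    (the same quantity as sklearn.metrics.brier_score_loss)."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(labels)


def inflate(p, k):
    """Multiply the odds of p by k: the idealized output of a model trained on
    data in which every positive row was replicated k times."""
    return p * k / (p * k + (1 - p))


rng = random.Random(0)
n = 200_000

# Synthetic 'true' default risks: logistic of a Gaussian score, with an
# intercept chosen so that roughly 5% of rows are positive.
true_p = [1 / (1 + math.exp(-(rng.gauss(0, 1) - 3.3))) for _ in range(n)]
labels = [1 if rng.random() < p else 0 for p in true_p]

# Odds ratio for moving the positive share from 5% to 25%: (0.25/0.75) / (0.05/0.95).
k = 19 / 3
shifted_p = [inflate(p, k) for p in true_p]

print(f"positive rate:           {sum(labels) / n:.3f}")
print(f"Brier, calibrated probs: {brier(true_p, labels):.4f}")
print(f"Brier, inflated probs:   {brier(shifted_p, labels):.4f}")
```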

Why is this happening? I thought upsampling would lead to worse calibration, but from the plots above calibration looks better, even though the Brier score got worse.
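One way to quantify the expected distortion is Bayes' rule with shifted class priors. This assumes resampling changes only the class proportions and not the feature distribution within each class, and that the model learns the resampled probabilities well. Replicating each positive row k times multiplies the positive class odds by k. That is roughly what setting XGBoost's `scale_pos_weight` to the same k does to the log-loss. The sketch computes k for the 5% to 25% change and maps a probability from the upsampled model back to the original 5% prevalence. Both function names are hypothetical.

```python
def upsample_factor(orig_rate: float, target_rate: float) -> float:
    """How many copies of each minority row are needed (majority untouched)
    to move the minority share from orig_rate to target_rate.
    This is the ratio of the class odds after vs. before resampling."""
    orig_odds = orig_rate / (1 - orig_rate)
    target_odds = target_rate / (1 - target_rate)
    return target_odds / orig_odds


def correct_prior_shift(p_train: float, train_rate: float, true_rate: float) -> float:
    """Map a probability from a model fitted at prevalence train_rate back to
    prevalence true_rate by reweighting the class priors with Bayes' rule.
    Assumes resampling changed only the class proportions, not P(x | y)."""
    pos = p_train * true_rate / train_rate
    neg = (1 - p_train) * (1 - true_rate) / (1 - train_rate)
    return pos / (pos + neg)


k = upsample_factor(0.05, 0.25)
print(round(k, 3))  # 6.333, i.e. each default row appears about 6.3 times

# A prediction of 0.5 from the upsampled model corresponds to a much lower
# risk on the original 5% population.
print(round(correct_prior_shift(0.5, 0.25, 0.05), 3))  # 0.136
```

Because the mapping is monotone, it leaves the ranking of borrowers (and therefore AUC) unchanged. It only moves the probabilities back toward the 5% base rate.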