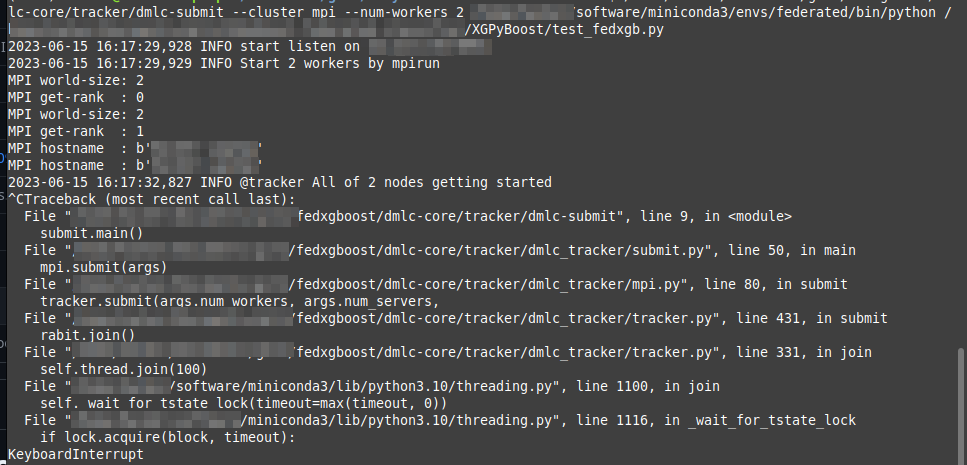

When I use DMLC, training keeps getting stuck and never seems to finish. The screenshot shows the output after I interrupt the program with Ctrl+C after waiting a long time.

I’m running a script from a federated XGBoost implementation. To speed things up, I only build the trees from 200 samples instead of the whole dataset, and I also use only 5 trees and a low max_depth.
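To make the setup concrete, here is a rough sketch of the reduced configuration. It is not the actual script: the dataset size, the helper name `pick_sample`, and the `max_depth` value of 3 are placeholders chosen only for illustration. Only the 200-sample subset and the 5 trees come from my real run.

```python
import random

N_ROWS = 10_000            # hypothetical dataset size (placeholder)
SAMPLE_SIZE = 200          # rows actually used to build the trees
NUM_TREES = 5              # number of boosting rounds
params = {"max_depth": 3}  # "low" depth; 3 is only an illustrative value


def pick_sample(n_rows, k, seed=0):
    """Return k distinct row indices chosen uniformly at random."""
    rng = random.Random(seed)
    return sorted(rng.sample(range(n_rows), k))


sample_idx = pick_sample(N_ROWS, SAMPLE_SIZE)
print(len(sample_idx), NUM_TREES, params["max_depth"])
```

With settings this small I would expect training to finish quickly, which is why the hang surprises me.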

Would anyone know what might cause this?

Thanks in advance.