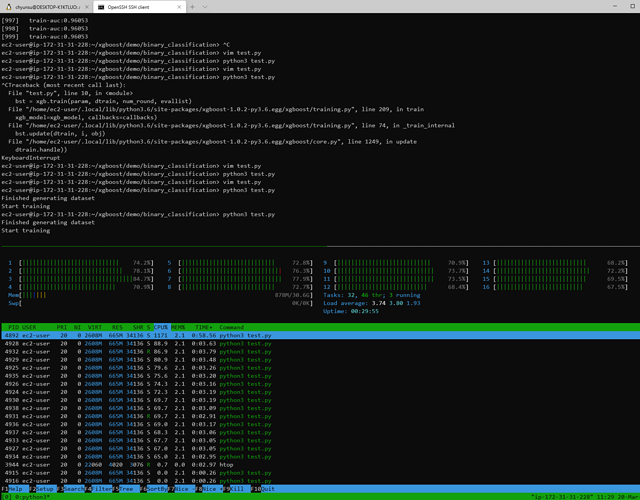

I am trying to run xgboost with multithreading from a Jupyter notebook in a conda Python environment. On macOS I previously got this working by installing `libomp` with Homebrew and then running `pip install xgboost`. However, since moving to a Linux machine running openSUSE Leap 15.1, I can’t get multithreading to work.

Things I tried:

Installing libgomp1 from openSUSE repos

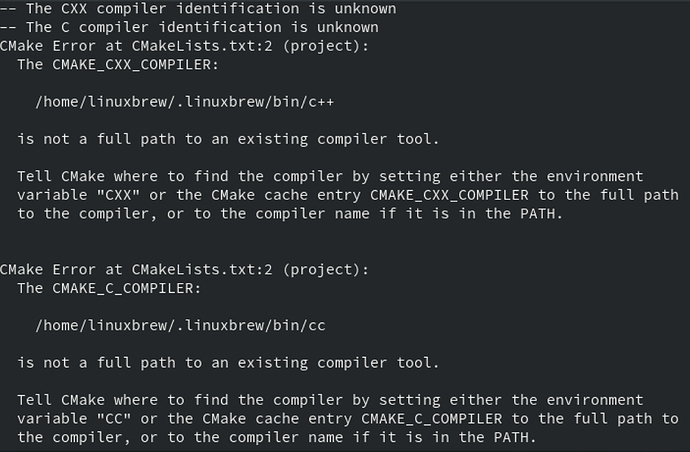

Installing Homebrew, then running `brew install libomp`

Installing various GCC compiler versions

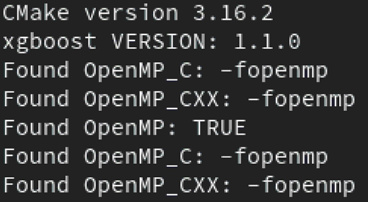

Building xgboost from source (cloning the xgboost GitHub repository, running `cmake`, then `pip install -e .`)

Installing xgboost with pip

Installing xgboost with conda

Although all of the above completed without errors, multithreading still doesn’t work.
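One way to narrow this down is to check, from inside the notebook kernel, whether an OpenMP runtime is actually reachable. Below is a minimal diagnostic sketch, assuming a Linux host and the usual shared-library names (`gomp` for GCC's libgomp, `omp` for LLVM's libomp, `iomp5` for Intel's runtime); the helper names are hypothetical. It lists the OpenMP libraries mapped into the process after `import xgboost`, tries to load a runtime with `ctypes` and call `omp_get_max_threads()`, and shows `OMP_NUM_THREADS`, since a value of `1` in the kernel's environment could limit OpenMP to a single thread when `nthread` is left at its default.

```python
import ctypes
import ctypes.util
import os

# Assumed shared-library names: GCC's libgomp, LLVM's libomp, Intel's libiomp5.
OPENMP_NAMES = ("gomp", "omp", "iomp5")


def find_openmp_runtime():
    """Return (path, omp_get_max_threads()) for the first OpenMP runtime that
    ctypes can locate and load, or (None, None) if none is found."""
    for name in OPENMP_NAMES:
        path = ctypes.util.find_library(name)
        if path is None:
            continue
        try:
            lib = ctypes.CDLL(path)
            get_max_threads = lib.omp_get_max_threads
        except (OSError, AttributeError):
            continue
        get_max_threads.restype = ctypes.c_int
        return path, get_max_threads()
    return None, None


def loaded_openmp_libs(maps_file="/proc/self/maps"):
    """Linux only: return the set of OpenMP library paths mapped into this process."""
    if not os.path.exists(maps_file):
        return set()
    found = set()
    with open(maps_file) as fh:
        for line in fh:
            parts = line.split()
            # The sixth field, when present, is the mapped file's path.
            if len(parts) >= 6 and any(f"lib{n}." in parts[-1] for n in OPENMP_NAMES):
                found.add(parts[-1])
    return found


def report_openmp_setup():
    """Print the diagnostics; xgboost is imported here so the helpers work without it."""
    import xgboost  # importing loads xgboost's native library and whatever it links against

    print("xgboost version:", xgboost.__version__)
    # Checked before find_openmp_runtime(), which may load a runtime itself via ctypes.
    print("OpenMP libraries mapped after import:", loaded_openmp_libs() or "none found")
    path, threads = find_openmp_runtime()
    print("ctypes-loadable OpenMP runtime:", path)
    print("omp_get_max_threads():", threads)
    print("os.cpu_count():", os.cpu_count())
    print("OMP_NUM_THREADS:", os.environ.get("OMP_NUM_THREADS", "<unset>"))
```

Running `report_openmp_setup()` in a fresh kernel shows whether the installed xgboost pulled in any OpenMP library at all; "none found" after the import would suggest a build without OpenMP support.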

I’m not sure what to try next, so any advice would be helpful. Thanks!

UPDATE: whether multithreading works seems to depend on the xgboost version (see below).
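Since the behaviour appears to vary by xgboost version, one way to compare versions like for like is to time the same training job with one thread and with all cores in each environment. This is a sketch that assumes `numpy` and `xgboost` are installed in the kernel's environment; the data sizes, round count, and function names are illustrative assumptions, not taken from the post. If multithreading works, the all-cores run should be noticeably faster; near-identical timings would suggest xgboost is effectively running single-threaded.

```python
import os
import time


def time_by_threads(train_fn, thread_counts):
    """Call train_fn(n) once for each thread count n; return {n: wall-clock seconds}."""
    timings = {}
    for n in thread_counts:
        start = time.perf_counter()
        train_fn(n)
        timings[n] = time.perf_counter() - start
    return timings


def benchmark_xgboost(num_rows=200_000, num_features=50, num_rounds=50):
    """Time xgboost training with nthread=1 versus nthread=<all cores>.

    Imports are local so time_by_threads() can be reused without xgboost installed.
    """
    import numpy as np
    import xgboost as xgb

    # Synthetic binary-classification data, built once so only training is timed.
    rng = np.random.default_rng(0)
    X = rng.normal(size=(num_rows, num_features))
    y = (X[:, 0] + rng.normal(size=num_rows) > 0).astype(int)
    dtrain = xgb.DMatrix(X, label=y)

    def train(n_threads):
        params = {"objective": "binary:logistic", "tree_method": "hist", "nthread": n_threads}
        xgb.train(params, dtrain, num_boost_round=num_rounds)

    cores = os.cpu_count() or 1
    train(cores)  # warm-up so any one-time setup is not charged to the first timed run
    print("xgboost version:", xgb.__version__)
    for n, seconds in time_by_threads(train, [1, cores]).items():
        print(f"nthread={n:>3}: {seconds:.2f} s")
```

In a notebook cell, `benchmark_xgboost()` prints the installed version and both timings; running it in environments with different xgboost versions makes the version dependence easy to see.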