Hello All,

Since I was having issues installing XGBoost w/ GPU support for R, I decided to use the Python version for the time being. I’m having a weird issue where setting “gpu_hist” reduces XGBoost’s run time, but without using the GPU at all.

Computer/Environment Info

CPU: i7-7820X

GPU: Nvidia RTX 2080

OS: Windows 10 Pro (64-bit)

Python: 3.7

I installed XGBoost with GPU support using the binaries posted here.

Problem:

So, I ran a slightly modified version of the GPU demo script hosted on the XGBoost GitHub. The only changes were to the print-out order and reducing the boosting iterations to 100 for the sake of speed.
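For anyone who wants to reproduce this kind of comparison, here is a minimal timing sketch. It is not the demo script itself: it assumes synthetic data (random features, 8 classes) instead of the demo’s dataset, and the helper names `train_xgb` and `time_tree_methods` are hypothetical. numpy and xgboost are imported inside `train_xgb` so the timing helper can be loaded and reused without them. Note that in XGBoost 2.0 and later, `gpu_hist` is deprecated in favor of `tree_method="hist"` together with `device="cuda"`.

```python
import time
from typing import Callable, Dict, Iterable


def train_xgb(tree_method: str, num_round: int = 100,
              n_rows: int = 100_000, n_features: int = 50) -> None:
    """Train a multiclass model on synthetic data with the given tree_method.

    Hypothetical helper: assumes numpy and xgboost are installed. They are
    imported lazily so this module loads even on machines without them.
    """
    import numpy as np
    import xgboost as xgb

    # Synthetic stand-in for the demo's dataset (an assumption).
    rng = np.random.default_rng(0)
    X = rng.standard_normal((n_rows, n_features))
    y = rng.integers(0, 8, size=n_rows)
    dtrain = xgb.DMatrix(X, label=y)
    params = {
        "objective": "multi:softmax",
        "num_class": 8,
        "tree_method": tree_method,  # e.g. "gpu_hist" or "hist"
    }
    xgb.train(params, dtrain, num_boost_round=num_round)


def time_tree_methods(train_fn: Callable[[str], None],
                      methods: Iterable[str]) -> Dict[str, float]:
    """Return the wall-clock seconds taken by train_fn(method) for each method."""
    timings = {}
    for method in methods:
        start = time.perf_counter()
        train_fn(method)
        timings[method] = time.perf_counter() - start
    return timings
```

Usage: `print(time_tree_methods(train_xgb, ["gpu_hist", "hist"]))`. Passing the training function in as an argument keeps the timing logic independent of XGBoost, so the same helper works for other configurations.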

Here is the printout after executing the script:

Notes:

- The GPU version did run faster than the CPU version (which is an OK sign)

- There was no printout information about Memory Allocation for the GPU (which is present in other folk’s

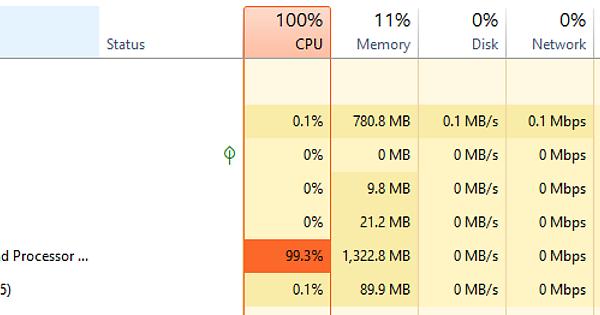

printouts. - Both the CPU version and the GPU version used all available CPU resources and didn’t touch the GPU according to the resource monitor (this behavior occurs regardless of the number of boosting rounds)

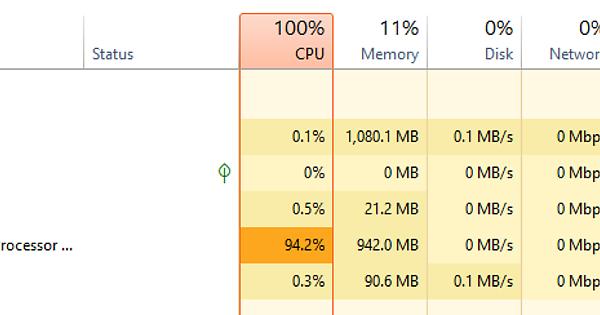

Resource usage with “gpu_hist” set as a parameter:

Note: Although GPU usage here was higher, that was due to applications other than the Python script.

Resource usage with “hist” (CPU only):

Am I actually using my GPU at all? It seems bizarre that the GPU usage would be so low.
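One way to check GPU activity independently of Windows’ built-in monitors (which may not chart CUDA compute load by default) is to poll `nvidia-smi` while the script trains. This is a minimal sketch, assuming `nvidia-smi` (installed with the NVIDIA driver) is on PATH; the function names are hypothetical. If “gpu_hist” is really running on the GPU, utilization and memory used should rise noticeably during training. From a terminal, `nvidia-smi --query-gpu=utilization.gpu,memory.used --format=csv -l 1` prints similar readings every second.

```python
import subprocess
from typing import List, Optional, Tuple

# Query GPU utilization (%) and memory used (MiB), one CSV line per GPU.
QUERY = ["nvidia-smi", "--query-gpu=utilization.gpu,memory.used",
         "--format=csv,noheader,nounits"]


def _to_int(field: str) -> Optional[int]:
    """Convert one CSV field to int; unsupported fields (e.g. "[N/A]") become None."""
    field = field.strip()
    return int(field) if field.isdigit() else None


def parse_gpu_stats(csv_text: str) -> List[Tuple[Optional[int], Optional[int]]]:
    """Parse nvidia-smi CSV output into (utilization %, memory MiB) per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, mem = line.split(",")
        stats.append((_to_int(util), _to_int(mem)))
    return stats


def sample_gpu_stats() -> List[Tuple[Optional[int], Optional[int]]]:
    """Run nvidia-smi once and return the parsed stats (requires the NVIDIA driver)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_stats(result.stdout)
```

For example, call `sample_gpu_stats()` from a second Python session while the GPU run is in progress and compare it with a reading taken while idle.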

Thanks for any suggestions. I think this will be more straightforward to figure out than the issues with the R package.

P.S. Sorry for the Imgur links; new users can only upload one image per post.