Hi All,

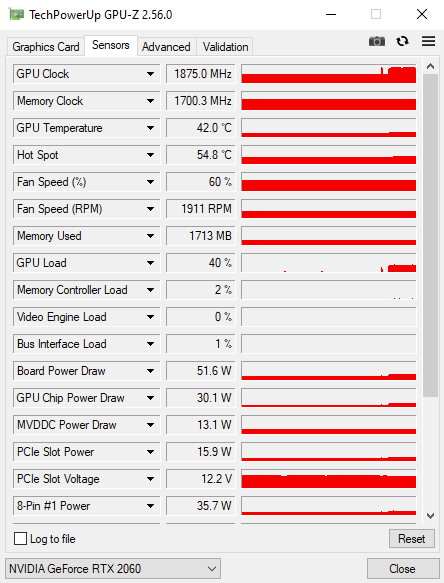

I’m going through this tutorial on XGBoost, and the author mentions that using `gpu_hist` should be “blazing fast” compared with passing `hist` as the tree method. No matter what I do, GPU utilization stays stuck at 40% with `gpu_hist`. With `hist`, my CPU uses all 24 logical cores at 100% and finishes nearly twice as fast.

With `gpu_hist`, the code block at the bottom of my post took 31.7 s. With `hist`, it took 14.3 s. The difference is consistent across subsequent runs.

Computer Specs

CPU: Ryzen 9 3900x

GPU: RTX 2060

RAM: 32 GB @ 3600 MHz

OS: Windows 10

Python: 3.11.5

I’m running this in a Jupyter notebook within VS Code.

What I’ve Tried

- Removed all packages and reinstalled them via pip

- Installed the CUDA Toolkit and cuDNN

- Confirmed the CUDA directory is on the PATH

- Confirmed my GPU is compatible

- Tried different parameters, such as `"device": "cuda"` and `"device": "gpu"` combined with `"tree_method": "hist"`

- Ran 100, 1000, 5000, and 10000 boosting rounds; the CPU is consistently faster
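For reference, here is a minimal sketch of the two parameter sets being compared. It assumes XGBoost 2.0 or later, where `gpu_hist` is deprecated in favor of `"tree_method": "hist"` plus `"device": "cuda"`. The names `make_params` and `LEGACY_GPU_PARAMS` are hypothetical, used only for illustration.

```python
def make_params(use_gpu: bool, objective: str = "reg:squarederror") -> dict:
    """Build a parameter dict in the XGBoost >= 2.0 style (assumed version).

    GPU vs. CPU is selected with "device"; "tree_method" is "hist" in both
    cases. "gpu" is also accepted as a device alias for "cuda".
    """
    return {
        "objective": objective,
        "tree_method": "hist",
        "device": "cuda" if use_gpu else "cpu",
    }


# Pre-2.0 spelling of the GPU configuration; deprecated since XGBoost 2.0.
LEGACY_GPU_PARAMS = {"objective": "reg:squarederror", "tree_method": "gpu_hist"}

gpu_params = make_params(use_gpu=True)
cpu_params = make_params(use_gpu=False)
```

Keeping `tree_method` fixed and switching only `device` means the two runs differ in nothing but where training happens.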

Here is the code block I’m running:

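```python
import xgboost as xgb

# dtrain_reg and dtest_reg are xgb.DMatrix objects for the training and
# validation sets, defined earlier in the notebook (not shown).
params = {"objective": "reg:squarederror", "tree_method": "hist"}
n = 5000  # number of boosting rounds

# Evaluate on both sets; print the metrics every 250 rounds
evals = [(dtrain_reg, "train"), (dtest_reg, "validation")]

model = xgb.train(
    params=params,
    dtrain=dtrain_reg,
    num_boost_round=n,
    evals=evals,
    verbose_eval=250,
)
```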
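One way to make the CPU/GPU comparison repeatable is to time both configurations on the same DMatrix with an identical round count. The sketch below assumes XGBoost 2.0 or later, which provides the `device` parameter. `time_configs`, `xgb_train_fn`, and `CONFIGS` are hypothetical helper names and are not part of XGBoost.

```python
import time
from typing import Callable, Dict, List, Tuple

# Hypothetical parameter sets, assuming XGBoost >= 2.0, where the training
# device is chosen with "device" and "tree_method" stays "hist" for both.
CONFIGS: Dict[str, dict] = {
    "cpu hist": {"objective": "reg:squarederror", "tree_method": "hist", "device": "cpu"},
    "cuda hist": {"objective": "reg:squarederror", "tree_method": "hist", "device": "cuda"},
}


def time_configs(
    train_fn: Callable[[dict], object],
    configs: Dict[str, dict],
    repeats: int = 1,
) -> List[Tuple[str, float]]:
    """Call train_fn(params) for each config and return (label, seconds) pairs.

    Each config is run `repeats` times and the fastest wall-clock time is kept,
    which reduces the influence of one-off start-up costs on the comparison.
    """
    if repeats < 1:
        raise ValueError("repeats must be >= 1")
    results: List[Tuple[str, float]] = []
    for label, params in configs.items():
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            train_fn(params)
            best = min(best, time.perf_counter() - start)
        results.append((label, best))
    return results


def xgb_train_fn(dtrain, num_boost_round: int) -> Callable[[dict], object]:
    """Hypothetical helper: wrap xgb.train so only params vary between runs.

    evals is omitted so the timing covers training only. xgboost is imported
    when this function is called, so time_configs works without it.
    """
    import xgboost as xgb

    def _train(params: dict):
        return xgb.train(params=params, dtrain=dtrain, num_boost_round=num_boost_round)

    return _train
```

In the notebook, `time_configs(xgb_train_fn(dtrain_reg, n), CONFIGS, repeats=2)` would return one `(label, seconds)` pair per configuration. Leaving out `evals` removes the per-round evaluation work from both timings. Adding it back would match the original run more closely.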