I’m new to ML and trying to understand XGBoost better.

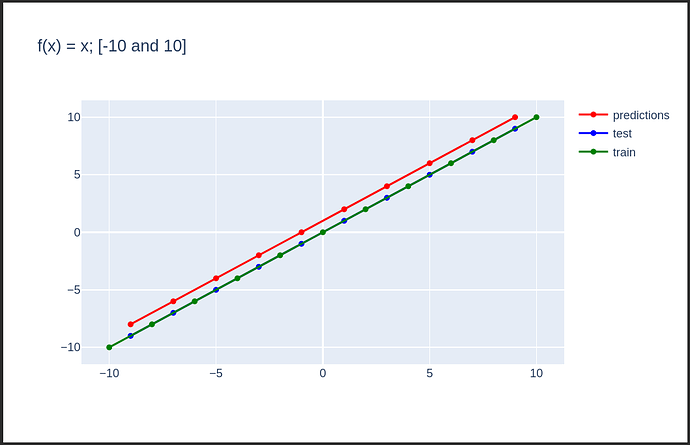

I tried a simple example in which each value to be predicted can be obtained by interpolating between two known values from the training set.

I assume the result I’m getting comes from the decision trees simply using the nearest known value. Is there something obvious I can do to get a better-fitting model?
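To see why the predictions look like steps, here is a small pure-Python sketch of gradient boosting with depth-1 regression trees and squared-error loss. It is a hand-rolled illustration under simplifying assumptions (one feature, thresholds placed halfway between neighbouring training values, made-up data on y = 2x). It is not XGBoost’s actual implementation or split-finding. Each tree outputs a constant on either side of its threshold, so the sum of trees is also constant between neighbouring thresholds. A new point therefore gets roughly the value of a nearby training point rather than an interpolated one.

```python
# Hand-rolled gradient boosting with depth-1 regression trees (stumps) and
# squared-error loss. NOT XGBoost itself: just enough to show the shape of
# what a tree ensemble predicts between training points.


def fit_stump(xs, residuals):
    """Return (threshold, left_value, right_value) with the lowest squared error.

    Assumes at least two distinct x values.
    """
    pts = sorted(zip(xs, residuals))
    best = None
    for i in range(1, len(pts)):
        if pts[i - 1][0] == pts[i][0]:
            continue  # no threshold fits between equal x values
        thr = (pts[i - 1][0] + pts[i][0]) / 2  # split halfway between neighbours
        left = [r for x, r in pts if x < thr]
        right = [r for x, r in pts if x >= thr]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, thr, lv, rv)
    return best[1:]


def boost(xs, ys, rounds=500, learning_rate=0.3):
    """Fit an additive model of stumps and return a predict(x) function."""
    base = sum(ys) / len(ys)  # start from the mean target
    preds = [base] * len(xs)
    stumps = []
    for _ in range(rounds):
        # For squared error, the negative gradient is just the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        thr, lv, rv = fit_stump(xs, residuals)
        stumps.append((thr, lv, rv))
        preds = [p + learning_rate * (lv if x < thr else rv) for x, p in zip(xs, preds)]

    def predict(x):
        # Sum of step functions: constant between neighbouring thresholds.
        return base + sum(learning_rate * (lv if x < thr else rv) for thr, lv, rv in stumps)

    return predict


# Hypothetical toy data on the line y = 2x (not the notebook's data).
train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [2.0 * x for x in train_x]
predict = boost(train_x, train_y)

for x in [1.0, 1.2, 1.4, 1.6]:
    print(f"x={x}: model={predict(x):.3f}, linear interpolation={2.0 * x:.3f}")
```

Running it, the model gives the same value at 1.0, 1.2 and 1.4 and jumps once x crosses the 1.5 threshold, while linear interpolation rises smoothly. XGBoost’s default trees are deeper and regularized, but each tree is still piecewise constant in its inputs, so a similar step shape is to be expected.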

My two ideas are:

- Augment the training data by inserting the interpolated points. I tried this and it helps a lot, but it feels like “cheating”, since those interpolated data points are not “real observations”.

- Do hyperparameter optimization. I haven’t tried this yet, but from what I’ve read it should be a last resort, more of a “final touch” for improving a model.
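The first idea, densifying the training set with interpolated points, can be sketched in plain Python. `augment_with_interpolation` is a hypothetical helper (not part of XGBoost), and it assumes a single numeric feature and a linear relationship between neighbouring training points. That linearity assumption is exactly what the extra rows inject into the model, which is presumably why it helps on data built from interpolation.

```python
def augment_with_interpolation(xs, ys, points_between=3):
    """Hypothetical helper: insert `points_between` linearly interpolated
    samples between each pair of neighbouring training points (1-D feature)."""
    pairs = sorted(zip(xs, ys))
    if not pairs:
        return [], []
    aug_x, aug_y = [], []
    for (x0, y0), (x1, y1) in zip(pairs, pairs[1:]):
        # Keep the real observation, then add synthetic points up to the next one.
        aug_x.append(x0)
        aug_y.append(y0)
        for k in range(1, points_between + 1):
            t = k / (points_between + 1)  # fraction of the way from x0 to x1
            aug_x.append(x0 + t * (x1 - x0))
            aug_y.append(y0 + t * (y1 - y0))
    # The loop only appends the left end of each pair, so add the last point.
    aug_x.append(pairs[-1][0])
    aug_y.append(pairs[-1][1])
    return aug_x, aug_y


# Example: one synthetic point halfway between (0, 0) and (2, 4).
print(augment_with_interpolation([0.0, 2.0], [0.0, 4.0], points_between=1))
```

The augmented lists would then be passed to the model’s fit call in place of the original training data.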

Code: https://datalore.jetbrains.com/view/notebook/gAB24epk0tGtYAvmqRX9F6